By Chris Rice and Kathy Tse

We recently demonstrated a new, industry-leading technology on our network that carries data at 400 gigabits per second, and we did it more efficiently than any previous method. This is vital for our data- and video-hungry consumer and business customers. Here's how we did it.

There were 4 unique aspects of this trial:

- A groundbreaking 400 gigabit Ethernet (GbE) signal carried on a single wavelength, enabling the highest-bandwidth service.

- A software-configurable OpenROADM photonic network to transport the wavelengths.

- An open-source software-defined networking (SDN) controller as the control platform.

- A white-box packet switch that generates some of the traffic running across the network.

400G wavelength technology

A 400 GbE service provides 4x the bandwidth of the 100 GbE services we use today. The extra bandwidth lets us carry more services in each lane. It's like replacing regular buses on city streets with quadruple-deckers.

In phase 1, we split the 400 GbE signal across 2 different wavelengths of laser light, carrying half the bandwidth on each lane, and sent them across our long-distance backbone network. In phase 2, we fit all that data onto a single wavelength, turning our bus into a quadruple-decker. All the data traffic could travel in 1 optical lane!
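The lane counts in the two phases come down to simple arithmetic. The Python sketch below counts how many wavelengths one client signal needs; the 200 Gb/s-per-wavelength figure for phase 1 follows from carrying half of the 400 GbE on each lane, and the function name is illustrative. It ignores line-side overhead such as forward error correction (FEC), so real line rates are somewhat higher than the client rate.

```python
import math


def wavelengths_needed(client_gbps: int, per_wavelength_gbps: int) -> int:
    """Wavelengths (optical lanes) needed to carry one client signal.

    Counts payload capacity only; FEC and framing overhead are ignored.
    """
    return math.ceil(client_gbps / per_wavelength_gbps)


# Phase 1: the 400 GbE client split across wavelengths of 200 Gb/s each.
print(wavelengths_needed(400, 200))  # 2
# Phase 2: one wavelength carries the full 400 Gb/s.
print(wavelengths_needed(400, 400))  # 1
# The same 400 Gb/s on 100 Gb/s lanes would take four wavelengths.
print(wavelengths_needed(400, 100))  # 4
```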

Carrying all the data in one lane meant the signal could not travel as far, so we ran this phase of the trial on our metro network, where distances are cross-town rather than cross-country. Eventually, further work in this area will let us increase those distances.

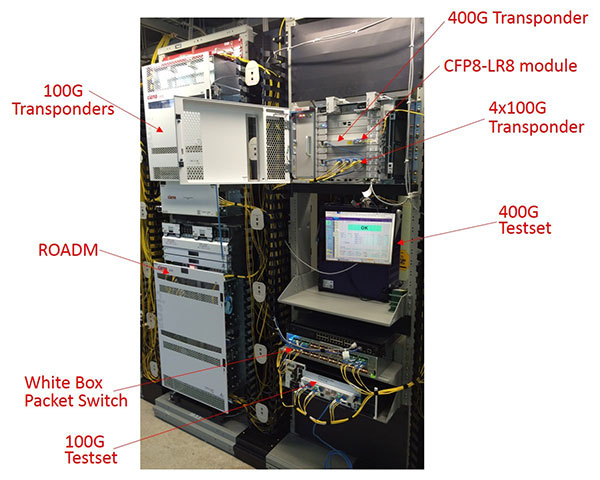

Creating a single-wavelength 400 GbE signal is huge. To connect customers, we're using first-generation 400 GbE client modules (CFP8 400GBASE-LR8) based on a soon-to-be-ratified IEEE standard (IEEE 802.3bs).

Two suppliers provided the 400 GbE pluggable optics for our trial, which we connected to 400 GbE test sets that use the emerging standard. These client modules are critical for connecting to our customers and for interconnections inside our offices, and we demonstrated that the 400G client modules were interoperable.

To our knowledge, the single-wavelength 400 GbE signal we sent between our offices is the first field demonstration of this technology.

Ciena provided the circuit packs that generate the signal, using a higher-order modulation format (32QAM, quadrature amplitude modulation with 32 constellation points) and digital signal processing to carry the full bandwidth on a single wavelength. Viavi provided the test sets that generated the 400 GbE signal for our testing.
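The benefit of a higher-order format like 32QAM comes down to bits per symbol: a constellation with M points carries log2(M) bits per symbol on each polarization. The Python sketch below estimates the symbol rate needed for 400 Gb/s under two assumptions that the post does not state: dual-polarization transmission (common in coherent optics) and no FEC or framing overhead, so real symbol rates would be higher. The numbers are illustrative, not the parameters of the trial equipment.

```python
def bits_per_symbol(constellation_points: int, polarizations: int = 2) -> int:
    """Bits per symbol: log2(M) per polarization times the number of polarizations.

    Assumes M is a power of two (QPSK = 4, 16QAM = 16, 32QAM = 32).
    """
    m = constellation_points
    if m < 2 or (m & (m - 1)) != 0:
        raise ValueError("constellation size must be a power of two >= 2")
    return (m.bit_length() - 1) * polarizations


def symbol_rate_gbaud(line_rate_gbps: float, constellation_points: int,
                      polarizations: int = 2) -> float:
    """Symbol rate (GBaud) needed for a line rate, ignoring FEC/framing overhead."""
    return line_rate_gbps / bits_per_symbol(constellation_points, polarizations)


for name, m in [("QPSK", 4), ("16QAM", 16), ("32QAM", 32)]:
    print(f"{name}: {bits_per_symbol(m)} bits/symbol, "
          f"{symbol_rate_gbaud(400, m):.0f} GBaud for 400 Gb/s")
# QPSK: 4 bits/symbol, 100 GBaud for 400 Gb/s
# 16QAM: 8 bits/symbol, 50 GBaud for 400 Gb/s
# 32QAM: 10 bits/symbol, 40 GBaud for 400 Gb/s
```

Packing more bits into each symbol lowers the symbol rate the optics must run at, but denser constellations place points closer together and tolerate less noise, which in general shortens reach.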

Network readiness over our OpenROADM systems

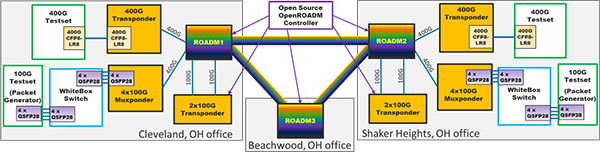

We ran our 400 GbE wavelengths across a deployed metro network in Cleveland, Ohio. The network uses equipment based on the OpenROADM Multi-Source Agreement (MSA), which is defined by a set of open APIs and models. Those open interfaces let us use equipment from different suppliers in the same network.

OpenROADM also uses software-programmable hardware, so wavelengths can be set up and moved remotely through flexible reconfigurable optical add/drop multiplexers (ROADMs). Think of our network connections as highways: now we can open or close lanes during rush-hour traffic or network events. In the trial, we used this capability to remotely set up the 400 GbE service, along with existing 100 GbE services, and then move them to a longer route on demand.

Although the 400 GbE transponder is still under development and not yet defined in OpenROADM, we demonstrated transmission over more than 300 kilometers and across 7 OpenROADM nodes in our lab. We expect the technology to keep improving and the distances to grow. The neighboring 100 GbE wavelengths were fully compatible with the OpenROADM specification.

Open-source SDN control

We showed the flexibility of our metro network using a controller based on open-source software that we developed with Orange's technical team and others through the OpenDaylight TransportPCE project. We extended it to support the 400 GbE rate, which is not yet included in the OpenROADM models and APIs.

Our controller is based on the OpenROADM YANG models and uses a model-driven design to enable automation. It abstracts the complexity of the ROADM network so that a higher layer of control, such as the open-source ONAP (Open Network Automation Platform), can apply bandwidth as needed. This work aligns with our shift to an open, software-centric network, giving our customers more network flexibility.
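In a model-driven controller, every request is checked against a data model before anything touches the hardware, and higher layers see only the service-level interface. The Python sketch below illustrates that pattern with plain dataclasses standing in for YANG-generated bindings. All names here (ServiceRate, ServiceRequest, Controller, create_service, and the ROADM node identifiers) are hypothetical; this is not the OpenROADM service model or the TransportPCE API.

```python
from dataclasses import dataclass
from enum import Enum


class ServiceRate(Enum):
    """Service rates the hypothetical model accepts, in Gb/s."""
    GBE_100 = 100
    GBE_400 = 400  # stands in for the 400 GbE extension described above


@dataclass(frozen=True)
class ServiceRequest:
    """A typed service request; field names are illustrative only."""
    name: str
    a_end: str  # hypothetical ROADM node identifiers
    z_end: str
    rate: ServiceRate

    def __post_init__(self) -> None:
        # Reject anything the model does not define before it reaches hardware.
        if not isinstance(self.rate, ServiceRate):
            raise TypeError("rate must be a ServiceRate defined by the model")
        if self.a_end == self.z_end:
            raise ValueError("A-end and Z-end must be different nodes")


class Controller:
    """Hypothetical controller: higher layers call create_service() and never
    see per-device details, which stay behind this abstraction."""

    def __init__(self, known_nodes: list[str]) -> None:
        self.known_nodes = set(known_nodes)
        self.services: dict[str, ServiceRequest] = {}

    def create_service(self, request: ServiceRequest) -> str:
        for node in (request.a_end, request.z_end):
            if node not in self.known_nodes:
                raise ValueError(f"unknown node: {node}")
        if request.name in self.services:
            raise ValueError(f"service already exists: {request.name}")
        # A real controller would now compute a path and program each ROADM.
        self.services[request.name] = request
        return request.name


controller = Controller(["ROADM-A", "ROADM-B", "ROADM-C"])
controller.create_service(
    ServiceRequest("svc-400g", "ROADM-A", "ROADM-C", ServiceRate.GBE_400))
controller.create_service(
    ServiceRequest("svc-100g", "ROADM-A", "ROADM-B", ServiceRate.GBE_100))
print(sorted(controller.services))  # ['svc-100g', 'svc-400g']
```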

Using this controller, we provisioned both the 400 GbE and the 100 GbE services through the open APIs. We then moved the services to a second path on demand to simulate a failure of the primary path.
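Moving a service to a second path amounts to recomputing the route with the failed link taken out of consideration. The Python sketch below does that with Dijkstra's shortest-path algorithm over a hypothetical six-node ROADM ring with made-up link lengths. It is a simplification, not the TransportPCE algorithm: a real optical path computation also has to account for wavelength availability and optical impairments along the route.

```python
import heapq


def shortest_path(links, src, dst, excluded=frozenset()):
    """Dijkstra over undirected links {(u, v): km}, skipping excluded links.

    Returns (path, total_km), or (None, inf) if dst is unreachable.
    """
    graph = {}
    for (u, v), km in links.items():
        if (u, v) in excluded or (v, u) in excluded:
            continue  # treat this link as failed
        graph.setdefault(u, []).append((v, km))
        graph.setdefault(v, []).append((u, km))

    best = {src: 0}
    prev = {}
    heap = [(0, src)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == dst:
            break
        if dist > best.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, km in graph.get(node, []):
            cand = dist + km
            if cand < best.get(nxt, float("inf")):
                best[nxt] = cand
                prev[nxt] = node
                heapq.heappush(heap, (cand, nxt))

    if dst not in best:
        return None, float("inf")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1], best[dst]


# Hypothetical metro ring of ROADM nodes; link lengths in km are made up.
ring = {("A", "B"): 20, ("B", "C"): 25, ("C", "D"): 30,
        ("D", "E"): 40, ("E", "F"): 35, ("F", "A"): 45}

print(shortest_path(ring, "A", "C"))  # (['A', 'B', 'C'], 45)
# Simulate a failure of link A-B on the primary path and reroute.
print(shortest_path(ring, "A", "C", excluded={("A", "B")}))
# (['A', 'F', 'E', 'D', 'C'], 150)
```

In this made-up topology the backup route is more than three times as long as the primary, which is why reach matters: a rerouted 400G wavelength still has to stay within its optical reach.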

White-box packet switch

In an earlier news release and blog, we highlighted a white-box packet switch operating at 10 gigabits per second across our deployed network. Now we've extended that capability to a higher-capacity white box, transporting and controlling its high-bandwidth traffic over a 400 gigabit wavelength on our OpenROADM network.

Advances that show our customers we are committed to leading

Our customers need more bandwidth and flexibility than ever. Trials like this one push the envelope of technology and open automation to meet those needs. Working with our suppliers lets us learn the readiness and limits of the technology while we drive automation through our open, flexible, software-controlled network.

Figure 1: 400GbE field trial configuration over OpenROADM in metro Cleveland, Ohio

Figure 2: Photos of 400G equipment tested over OpenROADM nodes in metro Cleveland, Ohio

Chris Rice - Senior Vice President – AT&T Labs, Domain 2.0 Architecture and Design

Kathy Tse - Director of the Photonic Platform Development group in the Packet/Optical Network organization of AT&T Labs